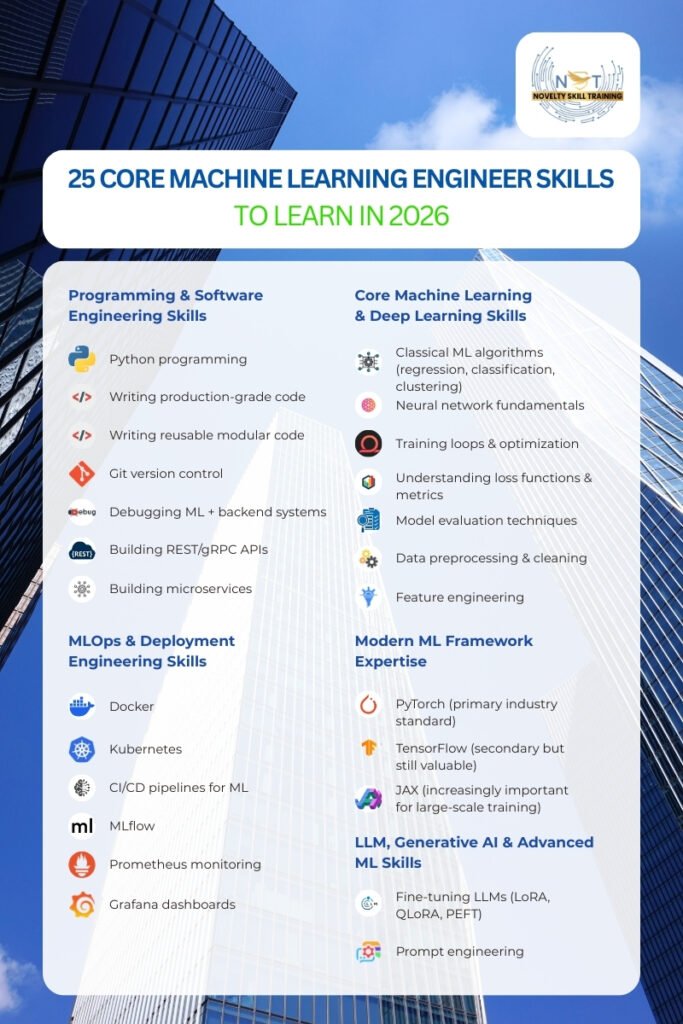

The role of a Machine Learning Engineer has expanded so rapidly that knowing algorithms and training models now represents only a small part of what the job actually requires. Traditional skills for Machine Learning Engineers aren’t sufficient today as modern ML engineers are expected to work across software engineering, MLOps, distributed systems, observability, deep learning frameworks, and production infrastructure.

As the UAE accelerates AI adoption across government, telecom, finance, healthcare, logistics, and energy in its mission to become a global hub for AI, aspiring ML engineers must equip themselves with this broader skill set. Established organizations in the region, such as G42, Microsoft, Emirates Group, Careem, and Etisalat, have high demand for ML engineers who can not only “build models” but also deliver end-to-end systems that are reliable, scalable, and aligned with real business needs. To understand what employers are truly looking for, we analysed 20 ML Engineer job descriptions across top UAE companies and identified the skills that consistently appear in hiring requirements.

These 25 skills represent the new expectations for ML engineers entering the 2026 UAE job market. Mastering them helps you build, deploy, and maintain AI systems at scale in one of the world’s most ambitious AI ecosystems.

Key Skills for Machine Learning Engineers

Programming & Software Engineering Skills

1. Python programming

Python programming has been a foundational skill for machine learning engineers. It enables you to write automation scripts, preprocess data, build training workflows, develop APIs, and deploy models into production.

Python remains dominant in 2026 because the entire modern ML stack (LLMs, multimodal models, vector search, distributed training) runs on Python interfaces. Even new performance-optimized runtimes (vLLM, PyTorch 2.x, JAX, Triton kernels) expose Python-first APIs, so engineers can scale systems without shifting to lower-level languages.

In the current ML ecosystem, Python powers every stage of the machine-learning lifecycle, from data preparation to deployment. Data pipelines are built using Python tools like Pandas, PySpark, and custom ETL scripts; model training happens in Python-first frameworks such as PyTorch, TensorFlow, and JAX; and modern LLM workflows like fine-tuning, RAG, and embeddings depend heavily on Python ecosystems including HuggingFace, LangChain, LlamaIndex, and Chroma.

Production deployment also relies on Python through FastAPI, Flask, and gRPC-based microservices, while inference optimization uses Python interfaces for ONNX Runtime, TensorRT, and accelerated runtimes. Even monitoring and automation tools—MLflow, Airflow, Prefect, and custom observability scripts—integrate primarily through Python, making it the universal language that binds the entire ML pipeline together.

Deep-learning framework surveys demonstrate PyTorch—built on Python—continues to lead the industry, and cloud platforms (AWS SageMaker, Vertex AI, Azure ML) all provide Python SDKs as their primary interface. For working professionals, this means one language gives access to model training, deployment, scaling, and monitoring across all major platforms.

Why this matters in the UAE:

Many UAE companies operate multilingual, enterprise-scale AI systems, and rely heavily on Python-based pipelines for deploying Arabic-aware models and real-time inference services.

Python Programming – Overview

| Skill | Python Programming |
|---|---|
| What it Involves | Core Python, scripting, OOP, debugging, virtual environments, writing clean reusable code, ML libraries (NumPy, Pandas, PyTorch, TensorFlow), building automation scripts and basic APIs |
| Where ML Engineers Need It | Data pipelines, Model training, LLM fine-tuning + RAG workflows, Model deployment, Inference optimization, Monitoring & automation |
| How to Learn | Free Resources: Think Python, 2nd Edition; The Python Tutorial |

Machine Learning code involves working with a huge number of algorithms, but Python simplifies the process for developers in their testing.

– Kevin Holland, Key Accounts Director at Argentek LLC, New York

(LinkedIn: https://www.linkedin.com/pulse/importance-python-machine-learning-kevin-holland/)

2. Writing Production-Grade Code

Writing production-grade code has been one of the most essential skills for machine learning engineers. Unlike research code, production ML code must be clean, modular, testable, optimized, and scalable.

Today, ML engineers must write robust, scalable code that integrates backend systems and handles real-time data, ensuring maintainability across large teams. With modern ML stacks like PyTorch 2.x, JAX, and Kubernetes, engineering discipline is key to building production-ready systems, not just prototypes.

Production-grade engineering ensures consistency across the entire ML lifecycle. Clean and modular code reduces bugs; structured components improve reproducibility; typed functions speed collaboration; and automated testing prevents model failure during deployment. As companies shift LLMs, RAG systems, and multimodal pipelines into production, the ability to engineer maintainable code has become a defining skill separating ML researchers from ML engineers.
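To make "typed, modular, testable" concrete, here is a minimal sketch using only the standard library. The names `ScalerParams`, `fit_scaler`, and `standardize` are hypothetical and chosen for illustration; the point is that small, typed functions with an accompanying unit test are easy to review and can be checked automatically before deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalerParams:
    """Statistics learned from training data (hypothetical container)."""
    mean: float
    std: float


def fit_scaler(values: list[float]) -> ScalerParams:
    """Compute the mean and population standard deviation of the values."""
    if not values:
        raise ValueError("values must not be empty")
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return ScalerParams(mean=mean, std=variance ** 0.5)


def standardize(values: list[float], params: ScalerParams) -> list[float]:
    """Apply z-score scaling; a zero std maps every value to 0.0."""
    if params.std == 0.0:
        return [0.0 for _ in values]
    return [(v - params.mean) / params.std for v in values]


def test_standardize_is_centered() -> None:
    """A tiny unit test of the kind a CI job could run on every commit."""
    params = fit_scaler([1.0, 2.0, 3.0])
    scaled = standardize([1.0, 2.0, 3.0], params)
    assert abs(sum(scaled)) < 1e-9            # scaled data is centered
    assert scaled[0] < scaled[1] < scaled[2]  # ordering is preserved


test_standardize_is_centered()
```

Keeping the fitted statistics in an immutable dataclass means the same parameters can be reused at inference time, which is one common way to avoid training/serving skew.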

Many industries emphasize that ML engineers should have software-engineering fundamentals (clean code, modularity, testing, production readiness) in addition to ML knowledge. For working professionals, this is the core competency that turns ML prototypes into real products.

Why this matters in the UAE:

Enterprises like Emirates Group, Etisalat and banks prioritise ML engineers who can build stable, scalable systems because their AI workloads run in high-availability, compliance-driven environments.

Writing Production-Grade Code – Overview

| Skill | Writing Production-Grade Code |
|---|---|
| What it Involves | Clean code, modular functions, OOP, typing, reusable components, testing, debugging, optimizing training/inference code, writing maintainable pipelines |
| Where ML Engineers Need It | Data pipelines, training workflows, LLM/RAG systems, backend API integration, deployment scripts, inference servers, monitoring pipelines |
| How to Learn | Free Resources: Clean Code (free summaries); Structure & Interpretation of Computer Programs (free) |

3. Building REST/gRPC APIs

Building REST and gRPC APIs has been essential for ML engineers because every production ML system needs an interface to serve predictions, expose model endpoints, or integrate with downstream applications. REST is widely used for web-based ML services, while gRPC provides high-performance communication for large-scale, low-latency inference systems.

Modern ML deployments—especially LLM inference servers, vector search systems, and distributed model-serving platforms—rely heavily on gRPC due to its speed and efficiency.

An article titled “Serve Machine Learning Models via REST APIs” shows how a typical ML model can be wrapped in a REST API (e.g. using FastAPI) to go from a development prototype to a production-ready service — enabling immediate consumption by other applications.
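The idea of wrapping a model behind an HTTP endpoint can be sketched without any third-party dependency. The example below is not FastAPI: it swaps in a plain WSGI application from the Python standard library so it stays self-contained. The `predict` function, its weights, and the `/predict` route are hypothetical stand-ins for a real trained model and API design.

```python
import io
import json


def predict(features: list[float]) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = [0.5, -0.25]
    return sum(w * x for w, x in zip(weights, features))


def respond(start_response, status: str, body: dict) -> list[bytes]:
    """Send a JSON response with the given HTTP status line."""
    start_response(status, [("Content-Type", "application/json")])
    return [json.dumps(body).encode("utf-8")]


def app(environ, start_response):
    """WSGI application exposing POST /predict with body {"features": [x1, x2]}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/predict":
        return respond(start_response, "404 Not Found", {"error": "not found"})
    try:
        size = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(size))
        features = [float(x) for x in payload["features"]]
        if len(features) != 2:
            raise ValueError("expected exactly 2 features")
    except (KeyError, TypeError, ValueError) as exc:
        # Request validation: reject malformed input instead of crashing.
        return respond(start_response, "400 Bad Request", {"error": str(exc)})
    return respond(start_response, "200 OK", {"prediction": predict(features)})


def call_app(method: str, path: str, body: bytes) -> tuple[str, dict]:
    """Invoke the app in-process, the way a WSGI server would, for quick checks."""
    captured = []
    environ = {
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
    }
    chunks = app(environ, lambda status, headers: captured.append(status))
    return captured[0], json.loads(b"".join(chunks))


print(call_app("POST", "/predict", b'{"features": [2, 4]}'))
# ('200 OK', {'prediction': 0.0})
```

For a local trial, the same `app` could be served with `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()`. A framework such as FastAPI would typically replace the manual routing and validation shown here with declarative equivalents.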

Recent developments include the rise of FastAPI for Python-based ML services, the adoption of gRPC in high-performance LLM serving (vLLM, TensorRT-LLM, Ray Serve), and production-grade API orchestration for RAG systems.

Why this matters in the UAE:

Dubai and Abu Dhabi’s AI ecosystems depend heavily on API-driven services—smart-city integrations, telecom AI products, aviation operations—making API-first ML deployment essential.

Building REST and gRPC APIs – Overview

| Skill | Building REST/gRPC APIs |
|---|---|
| What it Involves | FastAPI, Flask, gRPC, protocol buffers, request/response validation, authentication, rate limiting |
| Where ML Engineers Need It | Model serving, real-time inference, microservice integration, LLM endpoints, vector search pipelines |
| How to Learn | Free Resources: Pro Git (Free Book); Git Documentation (free) |

4. Debugging ML + Backend Systems

Debugging for ML engineers isn’t just about fixing errors—it includes tracing latency bottlenecks, diagnosing model drift, profiling GPU/CPU usage, and debugging distributed training failures. ML systems fail differently than typical software: data issues, numerical instability, serialization errors, or API mismatches.

A 2025 global survey of ML practitioners found that production ML systems frequently experience runtime issues like latency spikes, resource bottlenecks, and silent model degradation — underscoring why modern LLMs and distributed pipelines make robust debugging, monitoring, and observability more critical than ever.

Recent developments include profiling tools in PyTorch 2.x, OpenTelemetry tracing for ML systems, GPU debuggers (Nsight), and observability tools for LLM inference engines.
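Tracing a latency bottleneck often starts with something far simpler than a full tracing stack: timing each stage and comparing. The sketch below uses only the standard library; the `timed` decorator, the `LATENCIES` registry, and the two toy stages are hypothetical names for illustration, and the `time.sleep` call merely simulates a slow model.

```python
import functools
import time
from collections import defaultdict

# Per-stage latency samples in seconds, keyed by stage name.
LATENCIES: dict[str, list[float]] = defaultdict(list)


def timed(stage: str):
    """Decorator that records the wall-clock time of each call under `stage`."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Record the duration even if the wrapped call raises.
                LATENCIES[stage].append(time.perf_counter() - start)
        return wrapper
    return decorator


@timed("preprocess")
def preprocess(text: str) -> list[str]:
    return text.lower().split()


@timed("inference")
def infer(tokens: list[str]) -> int:
    time.sleep(0.01)  # simulate a slow model call
    return len(tokens)


def slowest_stage() -> str:
    """Return the stage with the highest mean latency recorded so far."""
    return max(LATENCIES, key=lambda s: sum(LATENCIES[s]) / len(LATENCIES[s]))


for _ in range(3):
    infer(preprocess("Trace where the time goes"))

print(slowest_stage())  # inference
```

In a production system the same measurements would more likely be emitted as spans or metrics to an observability backend, but the principle of instrumenting each stage separately is the same.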

Debugging ML + Backend Systems — Overview

| Skill | Debugging ML + Backend Systems |
|---|---|
| What it Involves | Tracing logs, profiling, analyzing GPU utilization, debugging API errors, handling failures in distributed jobs |
| Where ML Engineers Need It | Training failures, inference errors, performance slowdowns, memory leaks, API crashes |
| How to Learn | Free Resources: OpenTelemetry Docs; PyTorch Debugging Guide |

5. Writing Reusable Modular Code

Reusable modular code has been the foundation of scalable ML systems. ML engineers must break down complex workflows—preprocessing, training, evaluation, inference—into clean, maintainable modules.

The 2024 study Machine Learning Code Understanding examined how applying proper software-engineering design principles (e.g. modularity, SOLID) in ML projects affects code comprehension and maintainability. Its controlled experiments with 100 data scientists showed that code refactored with such principles was significantly easier to understand and maintain than messy “notebook-style” ML code.

Recent trends emphasize modular training loops, reusable LLM fine-tuning wrappers, shared vectorization modules, and plug-and-play inference components.
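One way to picture separation of concerns is a pipeline made of small, single-purpose steps that can be swapped or tested independently. The following is a minimal sketch under stated assumptions: the `Pipeline` class, the step functions, and the 1000.0 threshold are all hypothetical, and the "model" is a trivial rule standing in for real inference.

```python
from typing import Any, Callable, Sequence

Step = Callable[[Any], Any]


class Pipeline:
    """Runs a sequence of named, single-purpose steps in order."""

    def __init__(self, steps: Sequence[tuple[str, Step]]) -> None:
        self.steps = list(steps)

    def run(self, data: Any) -> Any:
        for _name, step in self.steps:
            data = step(data)
        return data


def clean(rows: list[dict]) -> list[dict]:
    """Preprocessing: drop rows with a missing 'amount' field."""
    return [r for r in rows if r.get("amount") is not None]


def featurize(rows: list[dict]) -> list[float]:
    """Feature extraction: keep only the numeric amount."""
    return [float(r["amount"]) for r in rows]


def score(features: list[float]) -> list[bool]:
    """Inference stand-in: flag amounts above a hypothetical threshold."""
    return [x > 1000.0 for x in features]


pipeline = Pipeline([("clean", clean), ("featurize", featurize), ("score", score)])
flags = pipeline.run([{"amount": 50}, {"amount": None}, {"amount": 2500}])
print(flags)  # [False, True]
```

Because each step is an ordinary function, a team can unit-test `clean` or replace `score` with a real model wrapper without touching the rest of the pipeline.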

Writing Reusable Modular Code — Overview

| Skill | Writing Reusable Modular Code |
|---|---|
| What it Involves | Functions, classes, modular pipelines, separation of concerns, code refactoring, documentation |
| Where ML Engineers Need It | Training frameworks, preprocessing modules, model wrappers, inference servers, batch/stream pipelines |
| How to Learn | Free Resources: SICP (Free Book); The Hitchhiker’s Guide to Python (free online) |

6. Git Version Control

Git version control has been the backbone of collaboration and reproducibility in machine learning engineering. It allows you to track changes, manage branches, review code, and maintain consistent versions of ML pipelines, training scripts, and model artifacts.

Today, Git goes far beyond basic commit and push operations. ML engineers rely on Git-integrated workflows for experiment tracking, data versioning, CI/CD pipelines, and deployment automation.

DVC (Data Version Control) describes itself as a Git-based tool for data, models, and pipelines designed to “make projects reproducible” and “help data science and machine learning teams collaborate better,” explicitly positioning Git as the backbone over which ML-specific versioning is layered.

Recent developments show Git becoming deeply embedded in ML tooling: GitHub Actions automates ML pipelines, DVC integrates Git-like versioning for datasets, and GitOps frameworks (like ArgoCD and Flux) drive continuous delivery of ML systems. These trends make Git an essential operational skill for ML engineers building scalable and reliable AI products.

Git Version Control – Overview

| Skill | Git Version Control |
|---|---|
| What it Involves | Branching, merging, pull requests, version tagging, conflict resolution, Git workflows, GitHub/GitLab CI |
| Where ML Engineers Need It | Experiment tracking, model versioning, deployment pipelines, team collaboration, GitOps-based ML deployments |
| How to Learn | Free Resources: Pro Git (Free Book); Git Documentation (free) |

7. Building Microservices

Building microservices is crucial for ML engineers today because modern ML systems are not run as notebooks; they are deployed as services. Microservices let ML engineers break down model inference, feature extraction, preprocessing, and monitoring into independent, scalable components.

This modular approach ensures ML systems remain reliable and efficient when handling millions of requests in real-world environments such as e-commerce platforms, financial services, autonomous vehicles, healthcare systems, and streaming services.

In ML engineering, microservices enable reliable model deployments, real-time inference, multi-model routing, vector search integrations, and modular RAG pipelines.

Deploying AI/ML in Microservices compares methodologies and tools for AI/ML microservices deployment across “leading companies,” showing that microservices-based deployment is becoming the norm.

Recent developments include microservice frameworks like FastAPI, BentoML, Ray Serve, and KServe, which help ML engineers build scalable model-serving layers. Cloud-native approaches—Kubernetes, Docker, auto-scaling, and service mesh frameworks—have made microservices the de facto deployment model for production ML, especially for LLMs and multimodal systems.

Why this matters in the UAE:

Most large UAE organisations run microservice-based architectures, so ML engineers must plug models into existing distributed systems rather than reinventing new pipelines.

Building Microservices – Overview

| Skill | Building Microservices |
|---|---|
| What it Involves | Service design, FastAPI/Flask, gRPC APIs, containerization, inter-service communication, autoscaling, service orchestration |
| Where ML Engineers Need It | Model inference services, RAG pipelines, vector search services, multimodal inference endpoints, monitoring services |
| How to Learn | Free Resources: Microservices.io (Free Patterns Guide); FastAPI Documentation (Free) |


Core Machine Learning & Deep Learning Skills

8. Classical ML Algorithms (Regression, Classification, Clustering)

Classical machine learning algorithms form the analytical foundation of ML engineering. These include regression (predicting continuous values like prices or demand), classification (predicting discrete labels like fraud/not fraud), and clustering (grouping similar data points without labels, such as segmenting customers).

Even though deep learning dominates headlines, most real-world ML products—fraud detection, churn prediction, recommendation scoring, risk modeling—still rely on these algorithms because they are fast, interpretable, cost-efficient, and reliable at scale, especially for low-latency and explainable AI requirements.
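As a small illustration of why these methods are fast and interpretable, here is a sketch of single-feature linear regression fitted in closed form with ordinary least squares, using only the standard library. The function names and the toy data are hypothetical; in practice a library such as scikit-learn would normally be used instead.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_line(x: float, slope: float, intercept: float) -> float:
    """Predict a continuous value for a new input."""
    return slope * x + intercept


# Toy data that follows y = 2x + 1 exactly.
slope, intercept = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
print(slope, intercept)  # 2.0 1.0
```

The two fitted numbers are the whole model: the slope says how much the prediction changes per unit of input, which is the kind of direct explanation regulated use cases often require.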

A 2025 article from Data Science Decoder argues that classical ML methods such as linear regression, decision trees, and logistic regression continue to outperform deep learning in many real-world settings, especially when data is structured, datasets are small or moderate in size, interpretability is required, or computational resources are limited.

– Iain Brown PhD

AI & Data Science Leader | Adjunct Professor | Author

(LinkedIn: https://www.linkedin.com/pulse/forgotten-models-why-classical-machine-learning-still-iain-brown-phd-vwibe/ )

Recent developments include scaled-up implementations using tools like Dask with scikit-learn for handling larger datasets, improved clustering methods for high-volume data, and new integrations such as Scikit-LLM that blend classical ML with LLM-powered feature extraction. These approaches enable regression, classification, and clustering models to complement modern pipelines by providing fast baselines, structured evaluations, and lightweight routing mechanisms within larger AI systems.

Classical ML Algorithms – Overview

| Skill | Classical ML Algorithms |
|---|---|
| What it Involves | Regression, classification, clustering, anomaly detection, model baselines, scikit-learn |
| Where ML Engineers Need It | Baseline modeling, fraud detection, ranking, forecasting baselines, quick prototypes, feature-based ML systems |
| How to Learn | Free Resources: Introduction to Statistical Learning (ISLR) (Free Book) |

9. Neural Network Fundamentals

Neural network fundamentals (layers, weights, activations, and gradient flow) are essential for ML engineers: they are crucial for diagnosing issues, tuning hyperparameters, and optimizing AI models such as LLMs, computer vision, and speech systems.

The Systematic Survey on Debugging Techniques for ML Systems examines real-world debugging issues in ML systems (drawn from GitHub repos and practitioner interviews). It reports that nearly half of the identified ML debugging challenges remain unresolved, and that a large share of problems stem from “model and training process faults,” which require deep insight into neural-network internals to diagnose.

Recent developments include PyTorch 2.x compile modes, enhanced autograd systems, better initialization strategies, and new architectures such as MLP-Mixers, ViTs, and state-space models that still rely on core neural network principles.
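The core mechanics of weights, activations, and gradient flow can be sketched with a single neuron in plain Python. This is an illustrative toy, not how a framework implements autograd: the squared-error loss, the sigmoid activation, and all function names are assumptions chosen for brevity. The finite-difference check at the end is a common way to verify a hand-written backward pass.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x: list[float], w: list[float], b: float) -> float:
    """One neuron: weighted sum of inputs plus bias, then a sigmoid activation."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss(x: list[float], w: list[float], b: float, target: float) -> float:
    """Squared error between the neuron's output and the target."""
    return 0.5 * (forward(x, w, b) - target) ** 2


def backward(x: list[float], w: list[float], b: float, target: float):
    """Analytic gradients of the loss w.r.t. weights and bias (chain rule)."""
    a = forward(x, w, b)
    dz = (a - target) * a * (1.0 - a)  # dL/da times da/dz
    return [dz * xi for xi in x], dz


def numeric_grad_w0(x, w, b, target, eps: float = 1e-6) -> float:
    """Finite-difference estimate of dL/dw[0], used to sanity-check backward()."""
    up = loss(x, [w[0] + eps] + w[1:], b, target)
    down = loss(x, [w[0] - eps] + w[1:], b, target)
    return (up - down) / (2 * eps)


x, w, b, target = [1.0, -2.0], [0.3, 0.1], 0.05, 1.0
grad_w, grad_b = backward(x, w, b, target)

# The analytic and numeric gradients should agree closely.
assert abs(grad_w[0] - numeric_grad_w0(x, w, b, target)) < 1e-6
```

When training diverges or stalls, the same reasoning applies at scale: inspecting whether gradients are vanishing, exploding, or simply wrong is usually the first diagnostic step.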

Neural Network Fundamentals – Overview

| Skill | Neural Network Fundamentals |
|---|---|
| What it Involves | Layers, activations, gradients, initialization, forward/backward propagation, regularization |
| Where ML Engineers Need It | Training DL models, debugging convergence, fine-tuning LLMs, optimizing model performance |
| How to Learn | Free Resources: Dive Into Deep Learning (Free Book) |

“Artificial neural networks learn through functions, weights, and biases, adjusting these parameters so the model can better capture the relationship between inputs and outputs — by scaling how strongly each input influences the neurons (weights), shifting neuron activation thresholds to improve flexibility (biases), and applying non-linear activation functions to model complex patterns that linear models cannot express.”

– Doug Rose

Author | Artificial Intelligence | Data Ethics | Agility

(LinkedIn: https://www.linkedin.com/pulse/understanding-importance-artificial-neural-network-weights-doug-rose-dzbqe/)

10. Training Loops & Optimization

Training loops and optimization define how models learn. ML engineers must design and modify these loops for tasks like distributed training, fine-tuning, and maintaining efficient production pipelines.

Optimizers like SGD, AdamW, and fused optimizers are critical for controlling model training speed, stability, and quality. The right optimizer ensures faster training, better convergence, and efficient use of resources in production.

The article GPU Efficiency in Machine Learning examines how inefficient GPU usage in ML training workflows leads to computational waste and increased cost, and argues that organizations must optimize resource utilization (e.g. via dynamic batching, mixed-precision training, and efficient data pipelines) to make model training sustainable, cost-effective, and performant.

Recent developments include fused optimizers in PyTorch 2.x, distributed optimizers in DeepSpeed/FSDP, FlashAttention-2, and training loop simplification with PyTorch Lightning and JAX Flax/Optax.
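Stripped of framework machinery, a training loop is a repeated cycle of forward pass, gradient computation, optional gradient clipping, and an optimizer step. The sketch below shows that cycle for a one-feature linear model with plain SGD in standard-library Python. The hyperparameter values, the `train` function, and the toy data are assumptions for illustration; real workloads would use a framework optimizer such as AdamW.

```python
import math
import random


def train(data, lr: float = 0.05, epochs: int = 200, clip: float = 5.0, seed: int = 0):
    """Fit y = w * x + b with per-sample SGD and gradient-norm clipping."""
    rng = random.Random(seed)
    samples = list(data)  # copy so the caller's data is not reordered
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(samples)  # new sample order each epoch
        for x, y in samples:
            error = (w * x + b) - y            # forward pass and residual
            grad_w, grad_b = error * x, error  # gradients of 0.5 * error**2
            # Rescale the gradient if its norm exceeds `clip`, keeping direction.
            norm = math.hypot(grad_w, grad_b)
            if norm > clip:
                grad_w, grad_b = grad_w * clip / norm, grad_b * clip / norm
            w -= lr * grad_w                   # optimizer step (plain SGD)
            b -= lr * grad_b
    return w, b


# Noise-free toy data generated from y = 3x + 2.
data = [(x, 3.0 * x + 2.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
w, b = train(data)
print(round(w, 3), round(b, 3))
```

Clipping by norm rather than per coordinate preserves the gradient direction, which is the same idea behind the norm-based clipping utilities that deep learning frameworks provide.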

Training Loops & Optimization – Overview

| Skill | Training Loops & Optimization |
|---|---|
| What it Involves | Writing loops, optimizers, schedulers, gradient clipping, AMP training, distributed optimization |
| Where ML Engineers Need It | Fine-tuning, LLM training, distributed GPU jobs, experiment scaling |
| How to Learn | Free Resources: PyTorch Training Loops (Free) |

11. Understanding Loss Functions & Metrics

Loss functions and metrics define how well a model performs. Engineers must pick the right loss for the task (classification, ranking, regression, contrastive learning, or generation) and the right metrics for production evaluation.

Good-practice guidance on evaluation states that poor selection of evaluation metrics or loss functions remains a key risk in ML development: as modern guidelines and best-practice reviews show, misaligned metrics or ill-designed evaluation protocols often lead to misleading offline performance and failures upon deployment.

Recent developments include LLM evaluation frameworks, contrastive losses in embedding models, reward models in RLHF, and new metrics for generative AI (BLEURT, ROUGE-Lsum, embedding-based evaluation).
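The distinction between a loss (what training minimizes) and a metric (what the business evaluates) is easier to see side by side. The following standard-library sketch implements mean squared error, binary cross-entropy, accuracy, and F1; the labels, probabilities, and the 0.5 decision threshold are made-up illustration values.

```python
import math


def mse(y_true: list[float], y_pred: list[float]) -> float:
    """Mean squared error, a typical regression loss."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true: list[int], probs: list[float], eps: float = 1e-12) -> float:
    """Mean negative log-likelihood; eps guards against log(0)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def f1_score(y_true: list[int], y_pred: list[int]) -> float:
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


labels = [1, 0, 1, 1]
probs = [0.9, 0.2, 0.4, 0.8]                  # model outputs
preds = [1 if p >= 0.5 else 0 for p in probs]  # hypothetical 0.5 threshold

print(accuracy(labels, preds))  # 0.75
print(round(f1_score(labels, preds), 3), round(binary_cross_entropy(labels, probs), 3))
```

Here the loss is computed on probabilities while accuracy and F1 depend on the chosen threshold, which is one reason a model with a good training loss can still disappoint on the metric that matters in production.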

Loss Functions & Metrics – Overview

| Skill | Loss Functions & Metrics |
|---|---|
| What it Involves | Cross-entropy, MSE, margin/ranking losses, contrastive loss, accuracy, F1, ROC-AUC, LLM metrics |
| Where ML Engineers Need It | Training, evaluation, deployment decisions, RAG evaluation, LLM scoring |
| How to Learn | Free Resources: Metrics & Losses Overview (Free); Deep Learning Book, Loss Functions (Free) |

12. Model Evaluation Techniques

Model evaluation techniques ensure ML systems work on real data, not just training sets. Evaluation covers test strategies, cross-validation, robustness testing, edge-case analysis, drift detection, and fairness checks.

This skill is critical because production ML systems face real-world challenges that offline notebook metrics cannot detect. According to the Rexer Analytics survey reported by KDnuggets, only 32% of data scientists say their ML models “usually deploy”, and just 22% report that their more ambitious, transformational ML initiatives make it into production.

Models in production degrade due to data drift, concept drift, and adversarial patterns, meaning ML engineers must rely on robust evaluation techniques—statistical drift tests (KS test, Chi-square, PSI, Jensen–Shannon Divergence), continuous monitoring, threshold optimization, and performance-by-segment validation—rather than static validation scores.
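Of the drift statistics named above, the Population Stability Index (PSI) is simple enough to sketch in a few lines. The version below uses equal-width bins derived from the reference sample and only the standard library; the bin count, the `eps` floor for empty bins, and the synthetic data are assumptions, and interpretation thresholds (values around 0.2 to 0.25 are often quoted as signalling a significant shift) are rules of thumb rather than fixed standards.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


reference = [i / 100 for i in range(100)]  # training-time feature values
shifted = [v + 0.3 for v in reference]     # simulated production drift

print(psi(reference, reference))  # 0.0
print(psi(reference, shifted) > 0.25)  # True
```

A monitoring job could compute this per feature on a schedule and raise an alert when the value crosses the team's chosen threshold.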

For LLMs, traditional accuracy metrics break down entirely as models generate confident hallucinations. This has led to the adoption of specialized evaluation frameworks such as HHEM, TruthfulQA, and real-time judgement pipelines. Modern companies now expect ML engineers to design, implement, and maintain these evaluation systems, so model behavior remains aligned with business impact, safety, and reliability.

Therefore, mastery of Model Evaluation Techniques is essential because it determines whether a model can survive real-world conditions, avoid silent failures, and maintain trustworthy performance long after initial deployment.

Recent developments include structured LLM eval tools, hallucination detection frameworks, statistical evals for RAG, and pipeline-level evaluation in production systems.

Model Evaluation Techniques – Overview

| Skill | Model Evaluation Techniques |
|---|---|
| What it Involves | Cross-validation, holdout testing, error analysis, stress tests, bias checks, LLM evaluation frameworks |
| Where ML Engineers Need It | Deployment readiness, monitoring, LLM/RAG evaluation, ranking systems, video/audio models |
| How to Learn | Free Resources: A Review of Model Evaluation Metrics |

13. Data Preprocessing & Cleaning

Data preprocessing and cleaning is the foundation of all ML work. Over 70% of ML engineering time goes into preparing data for training, because models fail when input data is messy, inconsistent, or unstructured.

With deep learning, LLMs, and multimodal AI becoming standard, clean and well‑structured data is non-negotiable. In production systems, companies expect ML engineers to build robust preprocessing pipelines, handling normalization, deduplication, tokenization or feature extraction, for both batch and real-time data flows.
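Normalization, deduplication, and tokenization can be illustrated on a handful of bilingual strings with the standard library alone. This is a deliberately simple sketch: the function names are hypothetical, the regex tokenizer is a stand-in for the subword tokenizers real LLM pipelines use, and `\w` matches Unicode letters and digits but not combining diacritics.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """NFKC-normalize, collapse whitespace, and case-fold."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip().casefold()


def deduplicate(records: list[str]) -> list[str]:
    """Drop empty records and exact duplicates after normalization, keeping order."""
    seen: set[str] = set()
    unique: list[str] = []
    for record in records:
        key = normalize(record)
        if key and key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


def tokenize(text: str) -> list[str]:
    """Word-level tokenization on Unicode word characters."""
    return re.findall(r"\w+", text)


raw = ["  Hello   World ", "hello world", "مرحبا بالعالم", ""]
cleaned = deduplicate(raw)
print(len(cleaned))            # 2
print(tokenize(cleaned[0]))    # ['hello', 'world']
```

The same three functions work unchanged on Arabic and English input because Python strings are Unicode throughout, although production Arabic pipelines usually add language-specific normalization on top.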

Recent developments include large-scale tokenization frameworks, text normalization pipelines for LLMs, image/video preprocessing libraries, and high-speed ETL transformations in PySpark and JAX.

Why this matters in the UAE:

UAE companies frequently deal with bilingual datasets (Arabic + English) and strict PDPL data-handling rules, making preprocessing a core operational skill.

Data Preprocessing & Cleaning – Overview

| Skill | Data Preprocessing & Cleaning |
|---|---|
| What it Involves | Handling missing values, normalization, tokenization, deduplication, filtering, encoding, ETL pipelines |
| Where ML Engineers Need It | LLM training, RAG ingestion, vision/audio pipelines, structured ETL workflows, feature extraction |
| How to Learn | Free Resources: Minimalist Data Wrangling with Python |

14. Feature Engineering

Feature engineering transforms raw data into meaningful features for better model performance. This includes creating new features, selecting important ones, and encoding domain knowledge into the model.

Although deep learning reduces manual feature engineering, it remains critical for tabular data, classical ML models, and hybrid systems that combine classical ML with deep learning or LLM workflows.
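For tabular data, the three basic moves are scaling numeric columns, encoding categorical ones, and adding domain-driven features. The sketch below shows each with standard-library Python; the transaction fields (`amount`, `channel`, `hour`) and the "night-time" rule are hypothetical examples of encoding domain knowledge, not a recommended fraud feature set.

```python
def min_max_scale(values: list[float]) -> list[float]:
    """Rescale values to the [0, 1] range; constant columns map to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(categories: list[str]) -> tuple[list[str], list[list[int]]]:
    """Encode categories as 0/1 vectors over a sorted vocabulary."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    rows = []
    for c in categories:
        row = [0] * len(vocab)
        row[index[c]] = 1
        rows.append(row)
    return vocab, rows


def build_features(rows: list[dict]) -> list[list[float]]:
    """Combine scaled, encoded, and domain-driven features into one matrix."""
    amounts = min_max_scale([r["amount"] for r in rows])
    _vocab, channels = one_hot([r["channel"] for r in rows])
    # Domain-driven feature: flag activity outside 06:00-22:00.
    night = [1.0 if r["hour"] < 6 or r["hour"] >= 22 else 0.0 for r in rows]
    return [[a, *c, n] for a, c, n in zip(amounts, channels, night)]


transactions = [
    {"amount": 100, "channel": "web", "hour": 14},
    {"amount": 300, "channel": "app", "hour": 23},
    {"amount": 200, "channel": "web", "hour": 3},
]
features = build_features(transactions)
print(features[1])  # [1.0, 1, 0, 1.0]
```

In production, the scaling bounds and the category vocabulary would be fitted once on training data and stored, so that serving applies exactly the same transformation.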

Recently, feature engineering has shifted from manual, one-off transformations to automated and scalable workflows. Tools like FeatureTools and AutoFeat enable automatic generation of features from raw data — speeding up development and reducing human error.

Meanwhile, feature‑store frameworks such as Feast make it possible to store, version, and serve consistent features in production. Combined with LLM‑assisted feature creation and embedding‑based representations, these practices allow ML engineers to build robust, repeatable, and scalable feature pipelines suitable for large‑scale ML systems.

Feature Engineering — Overview

| Skill | Feature Engineering |
|---|---|
| What it Involves | Feature creation, feature selection, encoding, scaling, dimensionality reduction, domain-driven features |
| Where ML Engineers Need It | Tabular modeling, classical ML, preprocessing pipelines, feature stores, hybrid ML–LLM systems |
| How to Learn | Free Resources: Featuretools Documentation (free) |

Modern ML Framework Expertise

15. PyTorch

PyTorch is the leading deep learning framework used by ML engineers to build, train, and deploy neural networks and LLMs. It provides a Python-first interface, dynamic computation graphs, GPU acceleration, and a flexible architecture that makes it ideal for experimentation and fast production deployment. Organizations building and deploying LLMs, multimodal models, and AI services at scale use PyTorch’s distributed‑training backend, TorchScript/ONNX for production deployment, and PyTorch‑native tooling for efficient fine‑tuning.

PyTorch is important because the modern AI ecosystem — including transformer architectures, LLM fine-tuning, diffusion models, multimodal training, and vector-embedding workflows — is built on top of the PyTorch stack. Libraries such as HuggingFace Transformers, Lightning, Diffusers, and vLLM are all PyTorch-native. This makes PyTorch the default skill for ML engineers working on generative AI or large-scale model development.

With PyTorch 2.x and features like torch.compile(), ML engineers can optimize performance with improved GPU/kernel fusion, faster training, and more efficient inference. This enables the development and deployment of large‑scale systems—LLMs, multimodal models, real-time inference services—while reducing compute costs and accelerating time-to-market.

PyTorch has evolved from a research tool to a production-ready framework, making expertise in it essential for scalable AI. The growth of PyTorch/XLA for TPU, PyTorch Lightning Fabric, and tight integration with NVIDIA TensorRT has strengthened its position as the industry’s primary deep-learning framework.

PyTorch – Overview

| Skill | PyTorch |
|---|---|
| What it Involves | Tensors, autograd, neural network modules, GPU acceleration, distributed training, model export (TorchScript/ONNX), LLM fine-tuning frameworks |
| Where ML Engineers Need It | LLM fine-tuning, generative AI, multimodal models, embedding generation, distributed training pipelines, experiment workflow creation |
| How to Learn | Free Resources: PyTorch Official Tutorials (Free) |

16. TensorFlow

TensorFlow is a deep learning framework used widely in large-scale production systems, especially in enterprise environments and mobile/edge deployments. While PyTorch has become dominant in research and LLM development, TensorFlow remains important for ML engineers building scalable systems within legacy infrastructures, regulated industries, or mobile applications.

TensorFlow is important because of its mature production ecosystem — TensorFlow Serving, TensorFlow Lite, and TensorFlow Extended (TFX), which still powers many real-world pipelines in healthcare, finance, electronics, and large enterprises.

According to a 2025 comparative survey, TensorFlow continues to offer the most mature production‑deployment ecosystem — with tools like TensorFlow Serving and TensorFlow Lite for mobile/edge — and remains a top choice for enterprise ML systems requiring scalable, stable deployments.

Recent TensorFlow updates, such as TensorFlow Lite for edge deployments, XLA for GPU optimization, and integration with Google’s Vertex AI, enhance model efficiency and scalability. Mastering these features builds expertise in mobile, edge, and cloud-based AI applications, which is highly valuable in real-world AI deployment roles and helps engineers contribute to production-ready AI systems.

TensorFlow also continues to support production stability for companies with long-existing TF-based workflows.

TensorFlow – Overview

| Skill | TensorFlow |
|---|---|
| What it Involves | Keras high-level APIs, computational graphs, distributed training, TensorFlow Serving, TensorFlow Lite, TFX pipelines |
| Where ML Engineers Need It | Edge AI, mobile deployment, enterprise ML systems, TFX pipelines, classical DL workloads |
| How to Learn | Free Resources: TensorFlow Official Tutorials (Free); TensorFlow Developer Guide |

17. JAX

JAX is a high-performance deep learning and numerical computing library that combines NumPy-like syntax with advanced features such as automatic differentiation, XLA compilation, and native support for distributed training, making it well suited to large-scale workloads. These capabilities are particularly valuable in LLM and multimodal model research, where they enable efficient scaling and optimization of complex AI systems.

JAX is important because the next generation of large-scale model development — including billion-parameter LLMs, diffusion models, and reinforcement learning systems — relies on high-throughput distributed compute, which JAX handles extremely efficiently. Its functional approach, deterministic behavior, and ability to run seamlessly on multi-host TPU/GPU setups make it the preferred framework at Google DeepMind, OpenAI (for some systems), and Anthropic for compute-heavy workloads.

Recent developments include the rapid adoption of JAX-based frameworks such as Flax, Equinox, T5X, PaLM training stack, and JAX-powered libraries for RL and multimodal models. Google’s TPU v5e and Cloud TPU integration continue to strengthen JAX’s ecosystem.

JAX – Overview

| Skill | JAX |

| What it Involves | JIT compilation, vectorization (vmap), parallelization (pmap, pjit), functional neural networks (Flax, Haiku), distributed training, accelerator execution |

| Where ML Engineers Need It | Large-scale LLM training, TPU-based workloads, generative models, high-throughput scientific ML, reinforcement learning |

| How to Learn | Free Resources: JAX Official Docs Flax (Neural Network Library for JAX) |

MLOps & Deployment Engineering Skills

18. Docker

Docker is crucial for ML engineers as it ensures consistency across various environments by packaging models, training pipelines, and APIs into portable containers. This eliminates the “works on my machine” issue, enabling reproducibility for training, inference, and large-scale experimentation. Mastering Docker helps ML engineers build scalable, reliable systems that run seamlessly across different platforms, from local setups to cloud environments.

Docker is critical in ML workflows because most production ML systems (LLM inference servers, microservices, vector-search engines, and model orchestration tools) run inside containers. This enables faster deployment, scalable serving, and reliable integration with cloud or Kubernetes-based ML platforms. ML teams use Docker to isolate model versions, standardize environments, and simplify GPU-based deployments.

As ML moves from experiments to production, with pipelines, deployment, and monitoring, containerized, GPU-ready, reproducible environments simplify deployment, scaling, and maintenance. Docker and GPU containers become part of the “production ML stack.” Modern ML engineers are often expected to bridge development and operations, and Docker is a core DevOps tool, which makes it a critical skill.
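To make the points about pinned model versions and GPU-enabled containers concrete, here is a minimal sketch of how a deployment script might assemble a `docker run` command. The image name, tag, and environment variable are hypothetical placeholders. The `--gpus all` flag is a standard Docker option, but it only works on hosts where the NVIDIA Container Toolkit is installed.

```python
import shlex


def docker_run_command(image, port=8000, use_gpu=False, env=None):
    """Assemble a `docker run` invocation for a model-serving container."""
    args = ["docker", "run", "--rm", "-p", f"{port}:{port}"]
    if use_gpu:
        # Exposes all host GPUs to the container (needs NVIDIA Container Toolkit).
        args += ["--gpus", "all"]
    for key, value in sorted((env or {}).items()):
        args += ["-e", f"{key}={value}"]
    # Pinning an explicit tag (not "latest") keeps the model version reproducible.
    args.append(image)
    return args


# Hypothetical image name and version, used only for illustration.
cmd = docker_run_command(
    "example/sentiment-api:1.4.2",
    use_gpu=True,
    env={"MODEL_VERSION": "1.4.2"},
)
print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids shell-quoting mistakes if the list is later passed to `subprocess.run`.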

Docker – Overview

| Skill | Docker |

| What it Involves | Building images, Dockerfiles, containerization, volumes, networking, GPU-enabled containers |

| Where ML Engineers Need It | Model serving, reproducible training pipelines, cloud deployment, RAG systems, GPU inference |

| How to Learn | Free Resources: Docker Guides |

19. Kubernetes

Kubernetes is essential for ML engineers, providing the orchestration needed to deploy and manage large-scale ML workloads. It enables seamless deployment of LLM servers, distributed training, and microservice-based AI systems. Kubernetes ensures that ML pipelines are scalable, fault-tolerant, and optimized for resource management, including GPU allocation and autoscaling, making it a critical tool for modern ML infrastructure.

This skill has become significant for ML because modern AI workloads require distributed, scalable, and resilient infrastructure. It enables ML engineers to run multi-node GPU clusters, auto-scale inference services, orchestrate batch/stream workloads, and manage complex ML pipelines end-to-end.

Recent developments such as Kubernetes-native ML stacks — including Kubeflow for end-to-end pipelines, Ray on K8s for distributed training, KServe for scalable model serving, and NVIDIA’s GPU Operator for automated GPU provisioning — highlight why Kubernetes has become a core skill for modern ML engineers.

These tools all depend on Kubernetes as the orchestration layer, meaning that the ability to deploy, scale, monitor, and manage ML workloads on a distributed cluster is now inseparable from production-grade machine learning.

Without strong Kubernetes skills, ML teams cannot reliably run distributed training jobs, autoscale inference endpoints, manage GPU resources efficiently, or operate resilient ML systems in real-world environments.
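As an illustration of GPU scheduling, the sketch below builds a Kubernetes Deployment manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name, image, and replica count are hypothetical. The `nvidia.com/gpu` resource name assumes the cluster runs the NVIDIA device plugin (for example, via the GPU Operator mentioned above).

```python
import json


def gpu_inference_deployment(name, image, replicas=2, gpus_per_pod=1, port=8000):
    """Return an apps/v1 Deployment manifest that requests GPUs for each pod."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # GPUs are requested through resource limits.
                            "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
                        }
                    ]
                },
            },
        },
    }


# Hypothetical name and image, for illustration only.
manifest = gpu_inference_deployment("llm-server", "example/llm-server:0.3.0")
print(json.dumps(manifest, indent=2))
```

In practice, teams usually template such manifests with Helm or Kustomize. The dictionary form is used here only to show which fields control replicas and GPU allocation.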

Kubernetes – Overview

| Skill | Kubernetes |

| What it Involves | Pods, deployments, services, autoscaling, GPU scheduling, Helm charts, operators |

| Where ML Engineers Need It | LLM serving, distributed training, RAG systems, feature stores, ML microservices |

| How to Learn | Free Resources: Kubernetes Documentation |

20. CI/CD Pipelines for ML

CI/CD for ML enables seamless automation of testing, validation, and deployment processes, ensuring smooth transitions from development to production. This is essential for ML systems that require frequent updates to models, data pipelines, and API services to keep pace with evolving data and user needs. By implementing CI/CD, ML engineers can maintain stability and minimize downtime while pushing out continuous improvements.

CI/CD has become essential for ML engineering in 2026, as industries face increasing demands for real-time model updates, especially in sectors like healthcare, finance, and e-commerce. Without robust CI/CD pipelines, issues like model drift, version inconsistencies, and deployment errors can go undetected, affecting critical services such as fraud detection, personalized recommendations, and patient monitoring.

Teams that automate these pipelines generally iterate faster and ship more reliable AI-driven services.
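One common building block in an ML pipeline is a validation gate that blocks deployment when a candidate model regresses against the current baseline. The sketch below is a minimal version of that idea. The metric names, values, and the 0.01 tolerance are illustrative assumptions, not recommendations.

```python
def validate_candidate(baseline, candidate, max_drop=0.01, required=("accuracy", "f1")):
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    for metric in required:
        if metric not in candidate:
            failures.append(f"{metric}: missing from candidate report")
            continue
        drop = baseline[metric] - candidate[metric]
        if drop > max_drop:
            failures.append(f"{metric}: dropped by {drop:.3f} (limit {max_drop:.3f})")
    return failures


# Hypothetical evaluation reports, e.g. loaded from JSON files produced by the pipeline.
baseline = {"accuracy": 0.912, "f1": 0.884}
candidate = {"accuracy": 0.915, "f1": 0.861}

failures = validate_candidate(baseline, candidate)
exit_code = 1 if failures else 0
for message in failures:
    print("FAILED:", message)
# In a CI job the script would finish with sys.exit(exit_code),
# so a non-zero code stops the deployment stage.
```

Here accuracy improves slightly but F1 falls by more than the tolerance, so the gate fails and the CI job (in GitHub Actions, GitLab CI, or similar) would stop before deployment.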

CI/CD for ML – Overview

| Skill | CI/CD for ML |

| What it Involves | Automated testing, linting, Docker builds, GitHub Actions, GitLab CI, deployment pipelines, rollbacks |

| Where ML Engineers Need It | Model retraining, evaluation pipelines, model deployment, high-frequency inference updates |

| How to Learn | Free Resources: Google MLOps CI/CD Guide |

21. MLflow

MLflow is a widely used open-source tool for tracking ML experiments, managing model versions, logging metrics, and deploying models. It helps ML engineers maintain reproducibility across datasets, training runs, and hyperparameters, especially when multiple models are trained simultaneously.

MLflow is critical for managing model lifecycle, ensuring consistency in tracking variants, parameters, and metrics across training and deployment stages. It integrates seamlessly with modern ML tools like Python, PyTorch, TensorFlow, and Kubernetes, making it indispensable for streamlined MLOps pipelines. By enabling efficient model versioning and monitoring, MLflow enhances reproducibility and scalability in production environments.

MLflow remains central to modern ML/LLM‑ops: it gives you experiment tracking, versioned model registry, and reproducible artifact management — essential for any serious production ML workflow.

Through integration with platforms like Databricks (or SageMaker), MLflow lets engineers register, manage, and deploy models (including fine‑tuned LLMs or custom AI models) via unified serving endpoints — turning experiments into production‑ready services. This makes MLflow a core skill for ML engineers aiming for scalable, maintainable, and compliant ML systems — from dev experiments through to production deployments.
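To show what experiment tracking records, here is a toy, standard-library stand-in. It is not MLflow's API. MLflow captures the same kind of information through calls such as `mlflow.log_param` and `mlflow.log_metric`, and adds a tracking server, artifact storage, and a model registry. All function names, parameters, and metric values below are hypothetical.

```python
import hashlib
import json
import time
import uuid


def record_run(store, params, metrics, dataset_bytes):
    """Append one training run, with a dataset fingerprint, to an in-memory store."""
    run = {
        "run_id": uuid.uuid4().hex,
        "timestamp": time.time(),
        "params": dict(params),
        "metrics": dict(metrics),
        # Hashing the data lets you check later that two runs used the same dataset.
        "dataset_sha256": hashlib.sha256(dataset_bytes).hexdigest(),
    }
    store.append(run)
    return run


def best_run(store, metric, higher_is_better=True):
    """Pick the run with the best value for the given metric."""
    def key(run):
        return run["metrics"][metric]
    return max(store, key=key) if higher_is_better else min(store, key=key)


runs = []
data = b"id,label\n1,0\n2,1\n"  # stand-in for a real training file
record_run(runs, {"lr": 1e-3, "epochs": 3}, {"val_accuracy": 0.87}, data)
record_run(runs, {"lr": 3e-4, "epochs": 5}, {"val_accuracy": 0.91}, data)

winner = best_run(runs, "val_accuracy")
print(json.dumps(winner["params"]))
```

The value of a tracker comes from recording parameters, metrics, and data identity together, so any result can be traced back to the exact configuration that produced it.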

MLflow – Overview

| Skill | MLflow |

| What it Involves | Experiment tracking, model registry, reproducibility, artifact storage, deployments |

| Where ML Engineers Need It | LLM fine-tuning logs, model versioning, A/B testing, continuous training pipelines |

| How to Learn | Free Resources: MLflow Official Documentation |

22. Prometheus Monitoring

Prometheus is essential for tracking real-time performance. The metrics it collects help surface issues such as model drift and inference bottlenecks, and its alert rules can trigger automated rollbacks that keep systems stable. These metrics also give deep insight into resource usage, latency, and scaling efficiency.

As the backbone of observability, Prometheus ensures that ML systems remain reliable by continuously monitoring API performance, GPU load, and infrastructure health in production.

Prometheus supports OpenMetrics‑compatible metric export, enabling standardized telemetry systems — critical for GPU‑powered ML pipelines.

With GPU exporters or LLM‑serving integrations (e.g. vLLM), ML engineers can monitor inference latency, GPU utilization, token throughput, and resource usage in real‑time. This makes Prometheus a central observability engine for ML/LLM production systems, enabling alerting, performance tracking, and scalable deployment monitoring.
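Prometheus works by scraping a plain-text metrics page from each service. The sketch below renders a few samples in the Prometheus text exposition format (`# HELP`, `# TYPE`, then `name{labels} value`). The metric names, label values, and numbers are hypothetical. The function assumes samples for the same metric are passed adjacently and does not escape special characters in label values. A real service would normally use an official client library rather than formatting by hand.

```python
def render_metrics(samples):
    """Render (name, type, help, labels, value) tuples as Prometheus text format."""
    lines = []
    seen = set()
    for name, metric_type, help_text, labels, value in samples:
        if name not in seen:
            # HELP and TYPE lines appear once per metric, before its samples.
            lines.append(f"# HELP {name} {help_text}")
            lines.append(f"# TYPE {name} {metric_type}")
            seen.add(name)
        label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
        series = f"{name}{{{label_str}}}" if label_str else name
        lines.append(f"{series} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metrics for an inference service.
samples = [
    ("inference_requests_total", "counter", "Total inference requests served.",
     {"model": "sentiment-v2"}, 1027),
    ("gpu_utilization_ratio", "gauge", "Fraction of GPU capacity in use.",
     {"gpu": "0"}, 0.83),
]
page = render_metrics(samples)
print(page)
```

A counter only ever increases (requests served), while a gauge can move up and down (GPU utilization). Alert rules and Grafana panels are then built on queries over these series.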

Prometheus Monitoring – Overview

| Skill | Prometheus Monitoring |

| What it Involves | Metric scraping, exporters, alert rules, time-series databases, integration with Grafana |

| Where ML Engineers Need It | Monitoring inference servers, GPU nodes, microservices, distributed training systems |

| How to Learn | Free Resources: Prometheus Official Documentation |

23. Grafana Dashboards

Grafana is crucial for monitoring ML systems, providing deep insights into model performance, inference latency, and resource utilization. It transforms raw metrics into actionable visualizations, enabling engineers to detect issues like model drift and optimize system efficiency. By integrating with Prometheus, it ensures continuous observability and drives data‑driven decisions in real time.

- Grafana — especially via OpenTelemetry + Grafana Cloud/AI‑observability integrations — now offers native support for monitoring full AI/ML stacks: from LLM inference latency to vector‑DB performance, GPU resource usage, and system-level health.

- With the addition of GPU‑monitoring dashboards and telemetry for generative‑AI workloads (LLMs, vector databases, distributed inference/training), engineers can spot issues — from latency spikes to GPU bottlenecks — before they impact production.

- For ML engineers building real-world AI systems, this means observability goes beyond traditional logs/metrics; Grafana enables continuous tracking of model performance, resource utilization, and inference quality, making it a core operational skill — not optional.

Grafana Dashboards – Overview

| Skill | Grafana Dashboards |

| What it Involves | Dashboard creation, panels, alerts, Prometheus integration, time-series visualization |

| Where ML Engineers Need It | Monitoring model latency, drift, token throughput, GPU metrics, pipeline health |

| How to Learn | Free Resources: Grafana Official Documentation |

LLM, Generative AI & Advanced ML Skills

24. Fine-tuning LLMs (LoRA, QLoRA, PEFT)

Fine-tuning LLMs with LoRA, QLoRA, and PEFT is about adapting huge foundation models to specific tasks without updating all their billions of parameters. Instead of full fine-tuning, these methods add or tweak a small number of extra parameters (adapters) on top of a frozen base model. This is a large part of why fine-tuning 7B–70B+ models on a single GPU or a few GPUs is now practical.

- LoRA (Low-Rank Adaptation) freezes the original model weights and injects tiny low-rank matrices (adapters) into selected layers. In the original paper’s GPT-3 175B experiments, this reduced trainable parameters by up to 10,000× and cut GPU memory by ~3× compared to full fine-tuning, while keeping similar performance.

- QLoRA combines quantization + LoRA: the base model is loaded in 4-bit precision, gradients are backpropagated through the frozen quantized weights into the LoRA adapters, and memory savings are large enough to fine-tune a 65B model on a single 48 GB GPU while matching 16-bit fine-tuning quality.

- PEFT (Parameter-Efficient Fine-Tuning) is the general approach (and also a Hugging Face library) that implements LoRA, QLoRA and similar methods so you can fine-tune only a small subset of parameters and still get near full fine-tuning performance.
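The LoRA idea from the list above can be sketched in a few lines of plain Python. Following the LoRA paper's notation, the adapted layer computes `h = W x + (alpha / r) * B (A x)`, where `W` is frozen and only the small matrices `A` (r × d_in) and `B` (d_out × r) are trained. The dimensions and values below are toy assumptions chosen for readability. Real implementations use a tensor library and apply this per layer.

```python
import random


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / r) * B (A x); W stays frozen, A and B are trainable."""
    r = len(A)  # adapter rank = number of rows in A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, r):
    """Parameters in one LoRA adapter pair: A is r x d_in, B is d_out x r."""
    return r * (d_in + d_out)


d_out, d_in, r = 6, 4, 2
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]    # frozen weights
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]     # small random init
B = [[0.0] * r for _ in range(d_out)]                                 # zero init
x = [1.0, -2.0, 0.5, 3.0]

# With B initialised to zeros, the adapted layer starts out identical to the base layer.
assert lora_forward(W, A, B, x, alpha=16) == matvec(W, x)

print(trainable_params(4096, 4096, 8), "trainable vs", 4096 * 4096, "frozen")
```

For a single 4096 × 4096 weight matrix at rank 8, the adapter has 65,536 trainable parameters against 16,777,216 frozen ones, a 256× reduction for that layer. The overall ratio for a full model depends on its size, the chosen rank, and which layers receive adapters.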

These techniques are critical in modern ML because most organizations don’t train LLMs from scratch—they fine-tune existing models (LLaMA, Mistral, Gemma, etc.) on private data, domain-specific instructions, or enterprise use cases. LoRA/QLoRA/PEFT lets you do that on commodity hardware, reuse the same base model with different lightweight adapters, and ship task-specific variants without storing dozens of full models.
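A quick back-of-the-envelope calculation shows why sharing one base model across adapters matters. The sketch below estimates weight storage only, as parameters × bits ÷ 8. It ignores activations, optimizer state, and quantization overhead, and the adapter size and number of variants are hypothetical.

```python
def weights_gb(num_params, bits_per_param):
    """Approximate storage for model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


base_params = 7_000_000_000     # a 7B-parameter base model
adapter_params = 20_000_000     # hypothetical LoRA adapter size
variants = 12                   # hypothetical number of task-specific variants

# Option 1: store a full 16-bit copy of the model for every variant.
full_copies_gb = variants * weights_gb(base_params, 16)

# Option 2: store one 16-bit base model plus one small adapter per variant.
shared_base_gb = weights_gb(base_params, 16) + variants * weights_gb(adapter_params, 16)

print(f"full copies: {full_copies_gb:.1f} GB, shared base: {shared_base_gb:.2f} GB")

# The same arithmetic shows the effect of 4-bit loading on a 65B model.
print(weights_gb(65_000_000_000, 16), "GB at 16-bit vs",
      weights_gb(65_000_000_000, 4), "GB at 4-bit")
```

Under these assumptions, twelve full copies need about 168 GB, while one base model plus twelve adapters needs about 14.5 GB. The last line shows that 65B weights shrink from 130 GB at 16-bit to 32.5 GB at 4-bit, which is consistent with QLoRA fitting on a single 48 GB GPU.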

Recent developments include Hugging Face’s PEFT library becoming a standard for adapter-based fine-tuning, LoRA/QLoRA being integrated into LLaMA and other LLM recipes from Meta and others, and a wave of tutorials and open repos that show end-to-end fine-tuning on a single GPU or even Colab.

Fine-tuning LLMs – Overview

| Skill | Fine-tuning LLMs (LoRA, QLoRA, PEFT) |

| What it Involves | LoRA adapters, 4-bit quantization (QLoRA), PEFT methods, configuring rank/α/dropout, loading and merging adapters, task-specific instruction or domain fine-tuning |

| Where ML Engineers Need It | Custom chatbots, domain-specific copilots, RAG systems, enterprise LLMs, multilingual models, low-resource fine-tuning, shipping specialized variants of base models |

| How to Learn | Free Resources: LoRA paper (original) QLoRA paper Hugging Face PEFT docs (main entry) Hugging Face LoRA conceptual guide |

25. Prompt Engineering

Prompt engineering is the skill of writing precise, structured instructions that steer LLMs to produce outputs that are useful, reliable, and safe. Instead of changing model weights, you “program” the model through natural-language prompts: roles, goals, constraints, examples, and step-by-step reasoning. OpenAI defines prompt engineering as the process of writing effective instructions, so a model consistently generates content that meets your requirements.

Prompt engineering is important because every LLM system—fine-tuned or not—still depends on prompts: system messages, tool-calling formats, RAG query templates, evaluation prompts, and safety guards. Good prompts improve factual accuracy, reduce hallucinations, produce more structured outputs (JSON, SQL, code), and make LLM agents more controllable. In production ML, prompt patterns (few-shot examples, chain-of-thought, ReAct, persona prompts, guardrails) are now part of the core interface between models and applications.
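Several of these patterns (a system message, few-shot examples, and a structured-output contract) can be combined in a small helper. The sketch below builds a message list in the common role/content chat format and validates a reply against the expected JSON keys. The classifier task, example tickets, and key names are hypothetical, and no model API is called here.

```python
import json


def build_messages(task, examples, user_input, schema_keys):
    """Build a chat-style prompt: system rules, few-shot examples, then the real input."""
    system = (
        "You are a support-ticket classifier. " + task + " "
        "Respond with a single JSON object containing exactly these keys: "
        + ", ".join(schema_keys) + "."
    )
    messages = [{"role": "system", "content": system}]
    for text, answer in examples:  # few-shot demonstrations
        messages.append({"role": "user", "content": text})
        messages.append({"role": "assistant", "content": json.dumps(answer)})
    messages.append({"role": "user", "content": user_input})
    return messages


def parse_reply(reply, schema_keys):
    """Return the parsed dict, or None if the reply breaks the output contract."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or set(data) != set(schema_keys):
        return None
    return data


keys = ["category", "urgent"]
examples = [
    ("I was charged twice this month.", {"category": "billing", "urgent": True}),
    ("How do I change my avatar?", {"category": "account", "urgent": False}),
]
messages = build_messages(
    "Classify each ticket by category and urgency.",
    examples,
    "My card was declined but money left my account.",
    keys,
)

# Simulated model replies: one that follows the contract and one that does not.
good = parse_reply('{"category": "billing", "urgent": true}', keys)
bad = parse_reply("Sure! This looks like a billing issue.", keys)
print(good, bad)
```

Validating the reply in code means a malformed response can be retried or routed to a fallback instead of silently breaking the downstream application.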

Recent developments include: official prompt engineering best-practices from OpenAI, Azure OpenAI, and others; academic work on prompt patterns and catalogs; and widely-used free courses (like the Andrew Ng + OpenAI “ChatGPT Prompt Engineering for Developers”) that formalize prompt techniques like role prompting, step-by-step reasoning, tool-use, and evaluation prompts.

Prompt Engineering – Overview

| Skill | Prompt Engineering |

| What it Involves | Designing system + user prompts, specifying roles/goals/constraints, few-shot prompting, chain-of-thought, ReAct, tool-calling prompts, structured-output formats (e.g., JSON), evaluation prompts |

| Where ML Engineers Need It | Chatbots, RAG pipelines, LLM agents, copilots (code, data, productivity), content generation, safety and guardrails, model evaluation and A/B testing |

| How to Learn | Free Resources: OpenAI Prompt Engineering Guide (official) OpenAI “Best practices for prompt engineering” (help center) Azure OpenAI – Prompt engineering concepts PromptingGuide.ai (community prompt engineering guide) |

Conclusion

As the UAE accelerates large-scale AI deployment, the expectations from ML engineers are becoming unmistakably clear: strong fundamentals, fluency in modern frameworks, and the ability to deliver systems that work reliably outside controlled environments. These 25 skills reflect those expectations and outline what it now takes to stay relevant in a rapidly evolving landscape.

If you’re preparing for a future career in ML engineering or aiming to build the foundations needed to eventually move into this field, the smartest approach is to focus on depth rather than chasing every new tool. Start by developing solid end-to-end projects and strengthening the core concepts that underpin modern AI systems. The market consistently rewards professionals who demonstrate clear problem-solving ability and practical execution — not scattered familiarity.

NST-Dubai’s AI and ML Training is designed for learners who want a strong, practical starting point in modern AI and machine learning. The program covers the essential building blocks of the field — Python programming, data preparation, classical ML algorithms, deep learning with TensorFlow/Keras, NLP fundamentals, and the basics of model deployment using Flask, FastAPI, Docker, and cloud platforms like AWS. You’ll work with real datasets, build end-to-end ML and DL projects, and develop portfolio-ready applications that show your ability to apply these concepts in real scenarios.

The future of AI is taking shape now — and building strong fundamentals is the first step to being part of it.